The course is a hands-on, research-level introduction to the areas of computer science that are directly relevant to journalism, and to the broader project of producing an informed and engaged public. We study two big ideas: the application of computation to produce journalism (such as data science for investigative reporting), and journalism about areas that involve computation (such as the analysis of credit scoring algorithms).

Along the way we will touch on many topics: information recommendation systems but also filter bubbles, principles of statistical analysis but also the human processes which generate data, network analysis and its role in investigative journalism, visualization techniques and the cognitive effects involved in viewing a visualization.

Assignments will require programming in Python, but the emphasis will be on clearly articulating the connection between the algorithmic and the editorial. Research-level computer science material will be discussed in class, but the emphasis will be on understanding the capabilities and limitations of this technology.

Format of the class, grading and assignments.

This is a fourteen-week, six-point course for CS & journalism dual degree students. (It is a three-point course for cross-listed students, who also do not have to complete the final project.) The class is conducted in a seminar format. Assigned readings and computational techniques will form the basis of class discussion. The course will be graded as follows:

- Assignments: 40%. There will be five homework assignments.

- Final project: 40%. Dual degree students will complete a medium-sized final project (for other students, this 40% comes from assignments).

- Class participation: 20%

Assignments will involve experimentation with fundamental computational techniques. Some assignments will require intermediate-level coding in Python, but the emphasis will be on thoughtful and critical analysis. As this is a journalism course, you will be expected to write clearly. The final project can be either a piece of software (especially a plugin or extension to an existing tool), a data-driven story, or a research paper on a relevant technique.

Dual degree students will also have a final project, which can be a research paper, a computationally driven story, or a software project. The class is conducted on a pass/fail basis for journalism students, in line with the journalism school’s grading system. Students from other departments will receive a letter grade.

Week 1: High-dimensional data – 9/12

CS techniques can help journalism in two main ways: using computation to do journalism, and doing journalism about computation. Either way, we’ll be working a lot with the abstraction of high-dimensional vectors. We’ll start with an overview of interpreting high-dimensional data, then jump right into clustering and the document vector space model, which we’ll need when we study natural language processing and recommendation engines.
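To make the document vector space model concrete, here is a minimal pure-Python sketch of TF-IDF weighting and cosine similarity. It assumes naive whitespace tokenization and the plain log(N/df) inverse document frequency, which is only one of several weighting variants covered in the Manning, Raghavan, and Schütze chapter; the sample sentences are made up for illustration.

```python
import math
from collections import Counter

PUNCTUATION = ".,;:!?\"'()"

def tokenize(text):
    # Deliberately naive: split on whitespace, strip punctuation, lowercase.
    words = (w.strip(PUNCTUATION).lower() for w in text.split())
    return [w for w in words if w]

def tfidf_vectors(docs):
    """Return one sparse TF-IDF vector (a dict of term -> weight) per document.
    TF is the raw count; IDF is log(N / df)."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))  # count each term once per document
    return [
        {term: count * math.log(n_docs / doc_freq[term])
         for term, count in Counter(tokens).items()}
        for tokens in tokenized
    ]

def cosine(u, v):
    """Cosine of the angle between two sparse vectors."""
    dot = sum(w * v.get(term, 0.0) for term, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

docs = [
    "The senator voted against the budget.",
    "The budget vote split the senate.",
    "Local team wins the championship game.",
]
vecs = tfidf_vectors(docs)
print(cosine(vecs[0], vecs[1]))  # positive: both documents contain "budget"
print(cosine(vecs[0], vecs[2]))  # 0.0: only "the" is shared, and its IDF is zero
```

A word that appears in every document, such as "the", gets an IDF of zero and drops out of the comparison. Real pipelines usually also remove stopwords and smooth the IDF term.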

References

- Computational Journalism, Cohen, Turner, Hamilton

- TF-IDF is about what matters, Aaron Schumacher

- Introduction to Information Retrieval Chapter 6, Scoring, Term Weighting, and The Vector Space Model, Manning, Raghavan, and Schütze.

- How ProPublica’s Message Machine reverse engineers political microtargeting, Jeff Larson

Viewed in class

Week 2: Text analysis – 9/19

We’ll start by picking up the story of text analysis in journalism, including the development of the Overview document mining system. Then we’ll cover probabilistic topic modeling (à la LDA), matrix factorization, more general plate-notation graphical models, and word embedding approaches based on deep learning. Then on to fundamental recommendation approaches such as collaborative filtering. To put this into practice, we will look at Columbia Newsblaster (a precursor to Google News) and the New York Times recommendation engine.
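Item-based collaborative filtering, as in the Sarwar et al. paper listed under Week 3, predicts a user's interest in an item from their ratings of similar items. The sketch below is a simplification under stated assumptions: it uses plain cosine similarity between item rating vectors (Sarwar et al. also discuss an adjusted cosine that subtracts each user's mean rating), a tiny hypothetical ratings dictionary, and no attention to scale.

```python
import math

def by_item(ratings):
    """Invert {user: {item: rating}} into {item: {user: rating}}."""
    items = {}
    for user, prefs in ratings.items():
        for item, r in prefs.items():
            items.setdefault(item, {})[user] = r
    return items

def cosine(u, v):
    """Plain cosine similarity between two sparse rating vectors."""
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def predict(ratings, items, user, target):
    """Similarity-weighted average of the user's ratings on other items."""
    num = den = 0.0
    for item, r in ratings[user].items():
        s = cosine(items[target], items[item])
        num += s * r
        den += abs(s)
    return num / den if den else None

def recommend(ratings, user, k=3):
    """Top-k items the user has not rated, ranked by predicted rating."""
    items = by_item(ratings)
    scored = []
    for item in items:
        if item in ratings[user]:
            continue
        p = predict(ratings, items, user, item)
        if p is not None:
            scored.append((p, item))
    return [item for _, item in sorted(scored, reverse=True)[:k]]

# Hypothetical readers rating news sections on a 1-5 scale.
ratings = {
    "ana": {"budget": 5, "senate": 4, "sports": 1},
    "ben": {"budget": 4, "senate": 5, "sports": 2, "courts": 4},
    "cai": {"sports": 5, "courts": 1, "weather": 4},
    "dee": {"budget": 5, "courts": 5},
}
print(recommend(ratings, "ana"))  # ['courts', 'weather']
```

"courts" ranks first for ana because readers who rated it also rated the politics sections she likes; "weather" is similar only to "sports", which she rated low.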

Required

- Tracking and summarizing news on a daily basis with Columbia Newsblaster, McKeown et al

- Topic modeling by hand, Shawn Graham

References

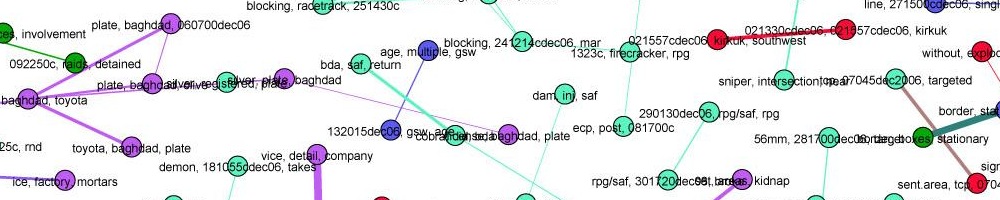

- A full-text visualization of the Iraq war logs, Jonathan Stray

- Document mining with the Overview prototype. Includes visualizations of TF-IDF space.

- Word2Vec tutorial: The Skip-gram Model, Chris McCormick

- Generating News Headlines with Recurrent Neural Networks, Konstantin Lopyrev

Discussed in class

- More than a Million Pro-Repeal Net Neutrality Comments were Likely Faked, Jeff Kao. Use of word embeddings (word2vec) in the public interest.

- What do journalists do with documents? Jonathan Stray

- Man is to Computer Programmer as Woman is to Homemaker? Debiasing Word Embeddings, Bolukbasi et al.

- Making Downton more traditional, Ben Schmidt

Assignment: LDA analysis of State of the Union speeches.

Week 3: Filter Design

We’ve studied filtering algorithms, but how are they used in practice — and how should they be? We will study the details of several algorithmic filtering approaches used by social networks, and effects such as polarization and filter bubbles.
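As a starting point for the filter design assignment, here is a hypothetical ranking rule, not any platform's actual algorithm: each item's votes are divided by a power of its age so that fresh items can rise, and a per-source cap is applied afterwards as one crude, explicit editorial choice about diversity. The `Item` fields, the `gravity` exponent, and the cap of two items per source are all arbitrary assumptions.

```python
from dataclasses import dataclass

@dataclass
class Item:
    title: str
    source: str
    votes: int
    age_hours: float

def score(item, gravity=1.5):
    # Hypothetical rule: engagement divided by a power of age, so a new
    # item can outrank an older item with more votes.
    return item.votes / (item.age_hours + 2) ** gravity

def rank(items, max_per_source=2, gravity=1.5):
    """Rank by decayed score, but show at most max_per_source items from any
    one source: a crude editorial constraint against a single-outlet feed."""
    ranked = sorted(items, key=lambda it: score(it, gravity), reverse=True)
    shown, counts = [], {}
    for it in ranked:
        if counts.get(it.source, 0) < max_per_source:
            shown.append(it)
            counts[it.source] = counts.get(it.source, 0) + 1
    return shown

stories = [
    Item("x1", "Outlet X", 100, 1),
    Item("x2", "Outlet X", 90, 1),
    Item("x3", "Outlet X", 80, 1),
    Item("y1", "Outlet Y", 10, 1),
    Item("y_old", "Outlet Y", 500, 48),
]
print([it.title for it in rank(stories)])  # ['x1', 'x2', 'y1', 'y_old']
```

The third story from Outlet X is dropped by the cap even though it outscores everything from Outlet Y. Whether that trade-off is right is exactly the kind of editorial question a filter design has to argue for.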

Readings

- Who should see what when? Three design principles for personalized news, Jonathan Stray

- How Facebook’s Foray into Automated News Went from Messy to Disastrous, Will Oremus

References

- How Reddit Ranking Algorithms Work, Amir Salihefendic

- Item-Based Collaborative Filtering Recommendation Algorithms, Sarwar et al.

- Matrix Factorization Techniques for Recommender Systems, Koren et al

- Building the Next New York Times Recommendation Engine, Alexander Spangher

- Reuters Tracer: A Large Scale System of Detecting & Verifying Real-Time News Events from Twitter, Liu et al.

- How does Google use human raters in web search?, Matt Cutts

Viewed in class

- Recommending items to more than a billion people, Facebook

- Israel, Gaza, War & Data: social networks and the art of personalizing propaganda, Gilad Lotan

- Exposure to Diverse Information on Facebook, Bakshy et al

Assignment 2: Design a filtering algorithm for an information source of your choosing

Week 4: Quantification and Statistical Inference

We’ll begin with the most neglected topic in statistics: measurement. We’ll take a detailed look at the question of what to count, and how to “interview the data” to check for data quality. Then we’ll move on to risk ratios, one of the simplest statistical models and a key idea in accountability. We’ll continue with a look at the uses of multi-variable regression in journalism, and study graphical causal models to help untangle the whole correlation/causation thing.
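The risk ratio compares how often an outcome occurs in one group versus another. Below is a minimal sketch that adds a 95% confidence interval computed on the log scale, the standard large-sample approximation. The ticket counts in the usage line are hypothetical and only illustrate the arithmetic.

```python
import math

def risk_ratio(a, b, c, d, z=1.96):
    """Risk ratio for a 2x2 table:

                    outcome   no outcome
        exposed        a          b
        unexposed      c          d

    Returns (rr, lower, upper) with a log-scale confidence interval."""
    risk_exposed = a / (a + b)
    risk_unexposed = c / (c + d)
    rr = risk_exposed / risk_unexposed
    # Standard error of log(rr), large-sample approximation.
    se = math.sqrt(1 / a - 1 / (a + b) + 1 / c - 1 / (c + d))
    lower = math.exp(math.log(rr) - z * se)
    upper = math.exp(math.log(rr) + z * se)
    return rr, lower, upper

# Hypothetical: 30 of 200 drivers from town A ticketed vs 15 of 300 from town B.
rr, lower, upper = risk_ratio(30, 170, 15, 285)
print(rr, lower, upper)  # rr about 3.0, interval roughly 1.66 to 5.43
```

An interval that excludes 1 suggests the difference is unlikely to be sampling noise alone, but it says nothing about why the rates differ. That is where the measurement and causal-model questions above come back in.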

Required:

- The Quartz Guide to Bad Data, Christopher Groskopf

- The Curious Journalist’s Guide to Data: Quantification, Jonathan Stray

Recommended

- Operationalizing, or the function of measurement in modern literary theory, Franco Moretti. A great discussion of quantification of complex concepts.

- What data can’t tell us about buying politicians, Stray. Why we need risk ratios to talk about corruption.

- If correlation doesn’t imply causation, then what does?, Michael Nielsen

Viewed in class

- Speed Trap: Who gets a ticket, who gets a break? Boston Globe. A classic use of regression.

- How we measured surgical complications, ProPublica. A more recent, extremely sophisticated use of regression.

Week 5: Algorithmic Accountability and Discrimination

Algorithmic accountability is the study of the algorithms that regulate society, from high frequency trading to predictive policing. We’re at their mercy, unless we learn how to investigate them. We’ll review previous work in this area, then start our study of algorithmic discrimination. Analyzing discrimination data is more subtle and complex than it might seem.
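One reason discrimination data is subtle: aggregate rates can point the opposite way from every subgroup, the pattern known as Simpson's paradox (the subject of the Bickel, Hammel, and O’Connell Berkeley admissions paper listed in Week 6). The admissions counts below are invented to reproduce the effect.

```python
# Hypothetical counts: (admitted, applicants) for each department and group.
ADMISSIONS = {
    "Dept A": {"men": (80, 100), "women": (18, 20)},
    "Dept B": {"men": (4, 20), "women": (25, 100)},
}

def rates_by_department(data):
    """Admission rate for each group within each department."""
    return {
        dept: {group: adm / apps for group, (adm, apps) in groups.items()}
        for dept, groups in data.items()
    }

def pooled_rates(data):
    """Admission rate for each group after adding up all departments."""
    totals = {}
    for groups in data.values():
        for group, (adm, apps) in groups.items():
            prev_adm, prev_apps = totals.get(group, (0, 0))
            totals[group] = (prev_adm + adm, prev_apps + apps)
    return {group: adm / apps for group, (adm, apps) in totals.items()}

print(rates_by_department(ADMISSIONS))
# {'Dept A': {'men': 0.8, 'women': 0.9}, 'Dept B': {'men': 0.2, 'women': 0.25}}
print(pooled_rates(ADMISSIONS))  # men about 0.70, women about 0.36
```

Women have the higher admission rate in each department, but most of them apply to the department that admits fewer people, so pooling reverses the comparison. Which breakdown answers the question depends on the causal story, not on the arithmetic.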

Required

- How We Analyzed the COMPAS Recidivism Algorithm, Larson et al.

- Bias In, Bias Out, Sandra Mayson

- Our course notebook: Machine Bias

References

- How Algorithms Shape our World, Kevin Slavin

- Big Data’s Disparate Impact, Barocas and Selbst

- Testing for Racial Discrimination in Police Searches of Motor Vehicles, Simoiu et al

Viewed in class

- Should Prison Sentences Be Based On Crimes That Haven’t Been Committed Yet?, FiveThirtyEight. Nice interactive visualization of risk scores.

- How the Journal Tested Prices and Deals Online, Jeremy Singer-Vine, Ashkan Soltani and Jennifer Valentino-DeVries

- How Uber surge pricing really works, Nick Diakopoulos

Week 6: Quantitative Fairness

Most algorithmic accountability and AI fairness work so far has been concerned with “bias,” but what is that? The answer is more complex than it might seem. In this class we’ll discuss the many definitions of fairness and show that they mostly boil down to three different formulations. We’ll also discuss everything around the algorithm, including how the results are used and what the training data means.
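One common way to name the three formulations, used by Barocas and Hardt, is independence (equal selection rates across groups), separation (equal true and false positive rates), and sufficiency (outcomes equally likely for people who receive the same prediction). The sketch below computes one statistic per criterion for each group from hypothetical (group, actual, predicted) records; it uses positive predictive value as a simple stand-in for sufficiency.

```python
from collections import defaultdict

def rate(num, den):
    return num / den if den else None

def group_metrics(records):
    """records: iterable of (group, actual, predicted) tuples with 0/1 labels.
    Returns one statistic per fairness criterion for each group."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, actual, predicted in records:
        if predicted:
            counts[group]["tp" if actual else "fp"] += 1
        else:
            counts[group]["fn" if actual else "tn"] += 1
    result = {}
    for group, c in counts.items():
        total = sum(c.values())
        result[group] = {
            # Independence: share of the group flagged positive.
            "selection_rate": rate(c["tp"] + c["fp"], total),
            # Separation: error rates conditioned on the true outcome.
            "tpr": rate(c["tp"], c["tp"] + c["fn"]),
            "fpr": rate(c["fp"], c["fp"] + c["tn"]),
            # Sufficiency (via PPV): outcome rate among those flagged positive.
            "ppv": rate(c["tp"], c["tp"] + c["fp"]),
        }
    return result

# Hypothetical data: group A has a 50% base rate, group B has 20%.
records = (
    [("A", 1, 1)] * 4 + [("A", 1, 0)] + [("A", 0, 1)] + [("A", 0, 0)] * 4
    + [("B", 1, 1)] * 4 + [("B", 1, 0)] + [("B", 0, 1)] + [("B", 0, 0)] * 19
)
for group, m in group_metrics(records).items():
    print(group, m)
# A {'selection_rate': 0.5, 'tpr': 0.8, 'fpr': 0.2, 'ppv': 0.8}
# B {'selection_rate': 0.2, 'tpr': 0.8, 'fpr': 0.05, 'ppv': 0.8}
```

In this made-up example the classifier has the same positive predictive value and true positive rate in both groups, yet its false positive rate and selection rate differ. When base rates differ, these criteria cannot all hold at once except in degenerate cases such as perfect prediction.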

Required:

- Fairness in Machine Learning, NIPS 2017 Tutorial by Solon Barocas and Moritz Hardt

- A case study of algorithm-assisted decision making in child maltreatment hotline screening decisions, Chouldechova et al.

- A Child Abuse Prediction Model Fails Poor Families, Wired

References

- How Big Data is Unfair, Moritz Hardt

- Testing for Racial Discrimination in Police Searches of Motor Vehicles, Simoiu et al

- Sex Bias in Graduate Admissions: Data from Berkeley, P. J. Bickel, E. A. Hammel, J. W. O’Connell. The classic paper on Simpson’s Paradox.

Week 7: Randomness and Significance

The notion of randomness is crucial to the idea of statistical significance. We’ll talk about determining causality, p-hacking and reproducibility, and the more qualitative, closer-to-real-world method of triangulation.
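Randomization makes significance concrete. A permutation test, in the spirit of the randomization arguments in the Cobb and Stray readings, asks how often randomly shuffling group labels produces a difference at least as large as the one observed. A minimal sketch for a difference in means, with made-up measurements and an arbitrary default of 10,000 shuffles:

```python
import random
from statistics import mean

def permutation_test(group_a, group_b, n_iter=10_000, seed=0):
    """Two-sided permutation test for a difference in means.
    Returns an estimated p-value."""
    rng = random.Random(seed)  # fixed seed so results are reproducible
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_iter):
        rng.shuffle(pooled)  # randomly reassign group labels
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            at_least_as_extreme += 1
    # Add one to numerator and denominator so the estimate is never exactly zero.
    return (at_least_as_extreme + 1) / (n_iter + 1)

treated = [12, 14, 15, 13, 16, 14]
control = [9, 10, 8, 11, 9, 10]
print(permutation_test(treated, control))  # roughly 0.002
```

Here only 2 of the 924 possible ways to split the twelve values into two groups of six are as extreme as the observed split, so shuffling almost never reproduces it. With noisy, overlapping data the same code will return a large p-value, and running many such tests on one dataset is how p-hacking starts.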

Required

- The Curious Journalist’s Guide to Data: Analysis, Jonathan Stray

- I Fooled Millions Into Thinking Chocolate Helps Weight Loss. Here’s How, John Bohannon

Recommended

- Why most published research findings are false, John P. A. Ioannidis

- Why Betting Data Alone Can’t Identify Match Fixers In Tennis, FiveThirtyEight

- Solve Every Statistics Problem with One Weird Trick, Jonathan Stray. A lightning talk on randomization.

- The Introductory Statistics Course: a Ptolemaic Curriculum, George W. Cobb. The argument for randomization instead of classic analytical statistics.

Viewed in class

- How not to be misled by the jobs report, Neil Irwin and Kevin Quealy. Noise in official figures.

- Science Isn’t Broken, Christie Aschwanden. P-Values and the replication crisis.

- The Psychology of Intelligence Analysis, Chapter 8, Richards J. Heuer

Week 8: Visualization, Network Analysis

Visualization helps people interpret information. We’ll look at design principles from user experience considerations, graphic design, and the study of the human visual system. Network analysis (aka social network analysis, link analysis) is a promising and popular technique for uncovering relationships between diverse individuals and organizations. It is widely used in intelligence and law enforcement, and increasingly in journalism.
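The centrality assignment uses Gephi, but the underlying metrics are short algorithms. Here is a pure-Python sketch of degree centrality and betweenness centrality (Brandes' algorithm for unweighted, undirected graphs), run on a hypothetical graph in which broker X connects a tight cluster A to D to an outsider Y.

```python
from collections import deque

def degree_centrality(graph):
    """Fraction of the other nodes each node is directly connected to."""
    n = len(graph)
    return {v: len(nbrs) / (n - 1) for v, nbrs in graph.items()}

def betweenness_centrality(graph):
    """Brandes' algorithm, unweighted and undirected, unnormalized.
    graph: dict mapping each node to a set of neighbours."""
    bc = dict.fromkeys(graph, 0.0)
    for s in graph:
        # BFS from s, counting shortest paths (sigma) and predecessors.
        stack, preds = [], {v: [] for v in graph}
        sigma = dict.fromkeys(graph, 0)
        sigma[s] = 1
        dist = dict.fromkeys(graph, -1)
        dist[s] = 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            stack.append(v)
            for w in graph[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate dependencies in order of decreasing distance from s.
        delta = dict.fromkeys(graph, 0.0)
        while stack:
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                bc[w] += delta[w]
    # Each undirected pair was counted once from each endpoint.
    return {v: c / 2 for v, c in bc.items()}

graph = {
    "A": {"B", "C", "D"},
    "B": {"A", "C", "D"},
    "C": {"A", "B", "D"},
    "D": {"A", "B", "C", "X"},
    "X": {"D", "Y"},
    "Y": {"X"},
}
degree = degree_centrality(graph)
between = betweenness_centrality(graph)
print(degree["A"], degree["X"])    # 0.6 0.4
print(between["A"], between["X"])  # 0.0 4.0
```

A has more connections than X but lies on no shortest paths, while X is the only route to Y: the two metrics answer different questions about influence. Tools differ in how they normalize these scores, so compare rankings rather than raw numbers across tools.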

Readings

- Visualization, Tamara Munzner

- Network Analysis in Journalism: Practices and Possibilities, Stray

References

- 39 Studies about Human Perception in 30 minutes, Kennedy Elliott

- Overview: The Design, Adoption, and Analysis of a Visual Document Mining Tool For Investigative Journalists, Brehmer et al.

- Visualization Rhetoric: Framing Effects in Narrative Visualization, Hullman and Diakopoulos

- Analyzing the Data Behind Skin and Bone, ICIJ

- Identifying the Community Power Structure, an old handbook for community development workers about figuring out who is influential by very manual processes.

- The Dynamics of Protest Recruitment through an Online Network, Sandra González-Bailón, et al.

- Simmelian Backbones: Amplifying Hidden Homophily in Facebook Networks. A sophisticated and sociologically-aware network analysis method.

Examples:

- The network of global corporate control, Vitali et al.

- Galleon’s Web, Wall Street Journal

- Muckety

- Theyrule.net

Assignment: Compare different centrality metrics in Gephi.

Week 9: Knowledge representation

How can journalism benefit from encoding knowledge in some formal system? Is journalism in the media business or the data business? And could we use knowledge bases and inferential engines to do journalism better? This gets us deep into the issue of how knowledge is represented in a computer. We’ll look at traditional databases vs. linked data and graph databases, entity and relation detection from unstructured text, and both probabilistic and propositional formalisms. Plus: NLP in investigative journalism, automated fact checking, and more.
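Linked data and graph databases store knowledge as (subject, predicate, object) triples and answer questions by matching patterns against them, which is what SPARQL does for RDF. Below is a toy sketch of that idea in Python. The people, companies, and predicate names are hypothetical, and a real system would use an RDF store or graph database rather than a Python set.

```python
# Hypothetical facts as (subject, predicate, object) triples.
TRIPLES = {
    ("Alice Smith", "board_member_of", "Acme Corp"),
    ("Bob Jones", "board_member_of", "Acme Corp"),
    ("Bob Jones", "board_member_of", "City Bank"),
    ("Acme Corp", "donated_to", "Mayor Lee"),
}

def match(pattern, triples):
    """Bindings for one pattern; terms starting with '?' are variables."""
    results = []
    for triple in triples:
        binding = {}
        for p, t in zip(pattern, triple):
            if p.startswith("?"):
                if binding.setdefault(p, t) != t:
                    break  # same variable bound to two different values
            elif p != t:
                break      # constant term does not match
        else:
            results.append(binding)
    return results

def query(patterns, triples=TRIPLES):
    """Join several patterns, like a tiny SPARQL basic graph pattern."""
    bindings = [{}]
    for pattern in patterns:
        new = []
        for b in bindings:
            # Substitute variables already bound by earlier patterns.
            grounded = tuple(b.get(term, term) for term in pattern)
            for extra in match(grounded, triples):
                new.append({**b, **extra})
        bindings = new
    return bindings

# Who sits on the board of an organization that donated to Mayor Lee?
for b in query([("?person", "board_member_of", "?org"),
                ("?org", "donated_to", "Mayor Lee")]):
    print(b["?person"], "via", b["?org"])
```

The query finds both Acme Corp board members and skips Bob Jones's City Bank seat, because City Bank has no donation triple. Questions like this span several hops and are awkward to express against documents, which is part of the case for storing journalism as structured data.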

Readings

- Identifying civilians killed by police with distantly supervised entity-event extraction, Keith et al.

- Extracting References from Political Speech Auto-Transcripts, Brandon Roberts

References

- A fundamental way newspaper websites need to change, Adrian Holovaty

- Relation extraction and scoring in DeepQA – Wang et al, IBM

- The State of Automated Fact Checking, Full Fact

- Storylines as Data in BBC News, Jeremy Tarling

- Building Watson: an overview of the DeepQA project

Viewed in class

- The next web of open, linked data – Tim Berners-Lee TED talk

- https://schema.org/NewsArticle

- Connected China, Reuters/Fathom

Assignment: Text enrichment experiments using OpenCalais entity extraction.

Week 10: Truth and Trust

Credibility indicators and schema. Information operations. Fake news detection and automated fact checking. Tracking information flows.

Readings

- Automated fact-checking has come a long way. But it still faces significant challenges, Poynter

- Information Operations and Facebook, Stamos

- Defense Against the Dark Arts: Networked Propaganda and Counter-Propaganda, Stray

References

- The Credibility Coalition is working to establish the common elements of trustworthy articles, journalism.co.uk

- Checking in with the Facebook fact-checking partnership, Ananny

Week 11: Privacy, Security, and Censorship

Who is watching our online activities? Who gets access to all of this mass intelligence, and what does the ability to surveil everything all the time mean, both practically and ethically, for journalism? In this lecture we cover both the basics of digital security and methods for dealing with specific journalistic situations: anonymous sources, handling leaks, border crossings, and so on.

Readings

References

- CPJ journalist security guide section 3, Information Security

- Global Internet Filtering Map, Open Net Initiative

- Tor Project Overview

- Who is harmed by a real-names policy, Geek Feminism

Viewed in class

- A World Without Wizards: On Facebook and Cambridge Analytica, Dave Karpf

- Allen Dulles’ 73 Rules of Spycraft

- The Mysterious Printer Code That Could Have Led the FBI to Reality Winner, The Atlantic

Week 12: Final Project Presentations